Mastering Database Failover for Business Continuity

In today’s data-driven landscape, uninterrupted database availability is a necessity, not a luxury, for organizations of all sizes and industries. Database failover is the process of switching database operations from a primary database, server, or cluster to a standby, either automatically or manually, when hardware failures, software issues, or other operational interruptions occur. Its goal is to keep essential services and data available to users with as little interruption as possible.

The significance of database failover lies in its ability to minimize downtime and maintain business continuity, which is critical in an era where delays can result in significant financial losses and diminished customer trust. Understanding the nuances of effective failover mechanisms is essential for database administrators and IT managers tasked with safeguarding their organizational data against unexpected disruptions.

Choosing Between Synchronous and Asynchronous Replication

One of the first considerations when developing a robust failover strategy is the choice between synchronous and asynchronous replication. With synchronous replication, the primary does not acknowledge a transaction as committed until the standby has confirmed that it received the change, so the standby already holds every acknowledged transaction when it takes over. The cost is latency: every commit waits for a round trip to the standby, which becomes significant when the two are geographically far apart.

Asynchronous replication, on the other hand, acknowledges a commit as soon as the primary has written it and propagates the change to the standby afterward. This improves write performance, but the standby can lag behind the primary, so transactions committed shortly before a failure may be missing from the standby when it is promoted. Assessing the organization’s specific needs, including its tolerance for data loss and the impact of latency on user experience, is crucial to selecting the appropriate replication strategy.
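
The trade-off can be made concrete with a toy model. The sketch below is a hypothetical in-memory simulation, not any real database’s replication API: `Node`, `ReplicatedPair`, `commit`, `ship_pending`, and `fail_over` are illustrative names. It shows that a synchronous pair loses nothing on failover, while an asynchronous pair can lose a write the client already saw as committed.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A toy database node that keeps committed values in an append-only log."""
    name: str
    log: list = field(default_factory=list)


class ReplicatedPair:
    """Hypothetical primary/standby pair used only to illustrate replication modes.

    mode="sync":  commit() returns only after the standby has the write.
    mode="async": commit() returns after the primary write; the standby
                  catches up later, when ship_pending() runs.
    """

    def __init__(self, mode: str):
        if mode not in ("sync", "async"):
            raise ValueError("mode must be 'sync' or 'async'")
        self.mode = mode
        self.primary = Node("primary")
        self.standby = Node("standby")
        self.pending = []  # acknowledged on the primary, not yet on the standby

    def commit(self, value) -> None:
        self.primary.log.append(value)
        if self.mode == "sync":
            # Block until the standby has the change (a network round trip in practice).
            self.standby.log.append(value)
        else:
            # Acknowledge immediately; replication happens in the background.
            self.pending.append(value)

    def ship_pending(self) -> None:
        """Simulate background replication catching up."""
        self.standby.log.extend(self.pending)
        self.pending.clear()

    def fail_over(self) -> list:
        """The primary crashes: promote the standby, return acknowledged writes that were lost."""
        lost = list(self.pending)
        self.pending.clear()
        self.primary = self.standby
        return lost


for mode in ("sync", "async"):
    pair = ReplicatedPair(mode)
    pair.commit("order-1")
    pair.ship_pending()
    pair.commit("order-2")  # the primary fails before this reaches an async standby
    lost = pair.fail_over()
    print(mode, pair.primary.log, "lost:", lost)
# sync ['order-1', 'order-2'] lost: []
# async ['order-1'] lost: ['order-2']
```

The writes sitting in `pending` at the moment of failure correspond to replication lag, which is what bounds potential data loss in asynchronous mode.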

Enhancing Response Times with Automated Failover

Alongside replication, automated failover can significantly shorten an organization’s response to failures. An automated failover system monitors the health of the primary database and, once it concludes the primary has failed, switches to the standby without waiting for human intervention, typically cutting recovery time from hours to seconds or minutes. Detection cannot be instantaneous, because the monitor must observe several failed health checks before acting to avoid failing over on a momentary glitch. This automation not only minimizes downtime but also relieves IT staff of manual recovery work, allowing them to focus on more strategic initiatives.
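
A minimal sketch of such a monitoring loop follows, assuming two hypothetical callbacks: `check_primary` (for example, a health probe that runs a trivial query with a timeout) and `promote_standby` (whatever your platform uses to promote the replica). Requiring `failure_threshold` consecutive failed checks avoids failing over on a single dropped probe; it also means detection takes at least about `failure_threshold × interval_s`, which in practice is seconds rather than milliseconds.

```python
import time
from typing import Callable, Optional


def monitor_and_fail_over(
    check_primary: Callable[[], bool],
    promote_standby: Callable[[], None],
    interval_s: float = 1.0,
    failure_threshold: int = 3,
    max_checks: Optional[int] = None,
) -> bool:
    """Poll the primary and promote the standby after N consecutive failed checks.

    Returns True if a failover was triggered, False if max_checks ran out first.
    """
    consecutive_failures = 0
    checks = 0
    while max_checks is None or checks < max_checks:
        checks += 1
        try:
            healthy = check_primary()
        except Exception:
            healthy = False  # a probe that errors out counts as a failed check
        if healthy:
            consecutive_failures = 0  # any success resets the streak
        else:
            consecutive_failures += 1
            if consecutive_failures >= failure_threshold:
                promote_standby()
                return True
        time.sleep(interval_s)
    return False


# Simulated probe results: one transient failure, then a real outage.
probe_results = iter([True, False, True, False, False, False])
promoted = []
fired = monitor_and_fail_over(
    check_primary=lambda: next(probe_results),
    promote_standby=lambda: promoted.append("standby"),
    interval_s=0,
    max_checks=6,
)
print(fired, promoted)  # True ['standby']
```

A production setup must also ensure the old primary stops accepting writes once the standby is promoted; otherwise both nodes may act as primary at the same time.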

However, even the most sophisticated failover mechanism can fail when it is needed if it is not regularly tested and validated, so a rigorous testing protocol is essential. Regular failover drills, which simulate failure scenarios under controlled conditions, help identify weaknesses and areas for improvement in the failover framework.

Testing, Validation, and Continuous Improvement

During failover drills, organizations should assess not only the speed and reliability of the switch to the standby system but also application performance and user experience after the switch. Post-failover performance checks show whether the standby can handle production load, including peak traffic, once it becomes the primary.
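
One way to make drills repeatable is to script them so every run records the same measurements. The sketch below assumes three hypothetical hooks you would supply: `trigger_failover` (starts the drill, for example by stopping the primary in a staging environment), `is_serving` (returns True once the application can use the promoted node), and `sample_query` (a representative query). It reports the observed recovery time and the 95th-percentile query latency afterward, and compares them with targets you choose.

```python
import statistics
import time
from typing import Callable


def run_failover_drill(
    trigger_failover: Callable[[], None],
    is_serving: Callable[[], bool],
    sample_query: Callable[[], None],
    rto_target_s: float,
    p95_target_ms: float,
    poll_s: float = 0.5,
    timeout_s: float = 300.0,
    samples: int = 50,
) -> dict:
    """Run one failover drill and compare recovery time and latency to targets."""
    if samples < 2:
        raise ValueError("need at least two latency samples for a percentile")

    start = time.monotonic()
    trigger_failover()

    # 1. How long until the application is served again?
    while not is_serving():
        if time.monotonic() - start > timeout_s:
            return {"recovered": False, "recovery_s": None, "p95_ms": None, "passed": False}
        time.sleep(poll_s)
    recovery_s = time.monotonic() - start

    # 2. How does the promoted node perform once it is serving?
    latencies_ms = []
    for _ in range(samples):
        t0 = time.perf_counter()
        sample_query()
        latencies_ms.append((time.perf_counter() - t0) * 1000)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    p95_ms = statistics.quantiles(latencies_ms, n=20)[-1]

    return {
        "recovered": True,
        "recovery_s": recovery_s,
        "p95_ms": p95_ms,
        "passed": recovery_s <= rto_target_s and p95_ms <= p95_target_ms,
    }


# Stand-in hooks for a dry run; replace them with real staging operations.
state = {"serving": False}
report = run_failover_drill(
    trigger_failover=lambda: state.update(serving=True),
    is_serving=lambda: state["serving"],
    sample_query=lambda: None,
    rto_target_s=30,
    p95_target_ms=50,
    poll_s=0,
)
print(report)
```

Tracking these numbers across drills shows whether changes to the failover setup are actually improving it.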

Evaluation should be ongoing. Feeding the findings of each drill back into the failover strategy improves database resilience over time, reduces vulnerabilities, and strengthens business continuity plans.

Integrating Disaster Recovery with Failover Strategies

In conjunction with practical failover strategies, having a well-structured disaster recovery plan that encompasses failover procedures is vital. This plan should address crucial aspects such as data integrity, backup protocols, and restoration procedures to ensure that data can be recovered to a specific point in time and that business operations can resume with minimal disruption.
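
Point-in-time recovery usually combines a full backup with a log of later changes: restore the most recent backup taken at or before the target time, then replay logged changes up to that time. The toy key-value model below (hypothetical `Backup` and `LogRecord` types, not any specific database’s format) sketches that logic, for example recovering to just before a mistaken delete.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class Backup:
    taken_at: datetime
    snapshot: dict  # full copy of the toy key-value data


@dataclass(frozen=True)
class LogRecord:
    at: datetime
    key: str
    value: Optional[int]  # None means the key was deleted


def restore_to_point_in_time(backups, log, target: datetime) -> dict:
    """Restore the newest backup at or before `target`, then replay the log up to `target`."""
    candidates = [b for b in backups if b.taken_at <= target]
    if not candidates:
        raise ValueError("no backup was taken at or before the target time")
    base = max(candidates, key=lambda b: b.taken_at)

    data = dict(base.snapshot)  # work on a copy; never modify the backup itself
    for rec in sorted(log, key=lambda r: r.at):
        # Replay only changes made after the backup and no later than the target.
        if base.taken_at < rec.at <= target:
            if rec.value is None:
                data.pop(rec.key, None)
            else:
                data[rec.key] = rec.value
    return data


backups = [Backup(datetime(2024, 1, 1, 0, 0), {"a": 1})]
log = [
    LogRecord(datetime(2024, 1, 1, 1, 0), "a", 2),
    LogRecord(datetime(2024, 1, 1, 2, 0), "b", 5),
    LogRecord(datetime(2024, 1, 1, 3, 0), "a", None),  # the mistaken delete
]
print(restore_to_point_in_time(backups, log, datetime(2024, 1, 1, 2, 30)))  # {'a': 2, 'b': 5}
```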

Organizations must establish protocols for routinely backing up data, both on-site and off-site, and define clear roles and responsibilities for IT personnel during a failover event. A comprehensive disaster recovery plan ensures that organizations are better positioned to respond promptly to unplanned outages and minimize the risk of data loss.
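
Routine backups only help if they keep happening, so a small scheduled check can flag when either copy falls behind. The sketch below assumes you can look up the timestamp of the latest successful backup per location; the `latest` mapping and the location names are hypothetical, and any copy older than `max_age` is treated as stale.

```python
from datetime import datetime, timedelta, timezone
from typing import Dict, List, Optional

REQUIRED_LOCATIONS = ("on-site", "off-site")  # hypothetical location labels


def stale_backup_locations(
    latest: Dict[str, datetime],
    max_age: timedelta,
    now: Optional[datetime] = None,
) -> List[str]:
    """Return the required locations whose newest backup is missing or older than max_age."""
    now = now or datetime.now(timezone.utc)
    stale = []
    for location in REQUIRED_LOCATIONS:
        taken_at = latest.get(location)
        if taken_at is None or now - taken_at > max_age:
            stale.append(location)
    return stale


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
latest = {
    "on-site": now - timedelta(hours=1),
    "off-site": now - timedelta(hours=30),  # the off-site copy job has been failing
}
print(stale_backup_locations(latest, max_age=timedelta(hours=24), now=now))  # ['off-site']
```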

Emerging Trends: Cloud Failover and AI Monitoring

Looking forward, database failover technology is evolving rapidly, driven by advances in cloud computing and artificial intelligence. Cloud-based failover solutions offer a flexible, scalable alternative to traditional on-premises setups. Managed cloud database services often include built-in redundancy, such as a standby replica in a separate availability zone, along with automated failover, which simplifies implementation and improves reliability.

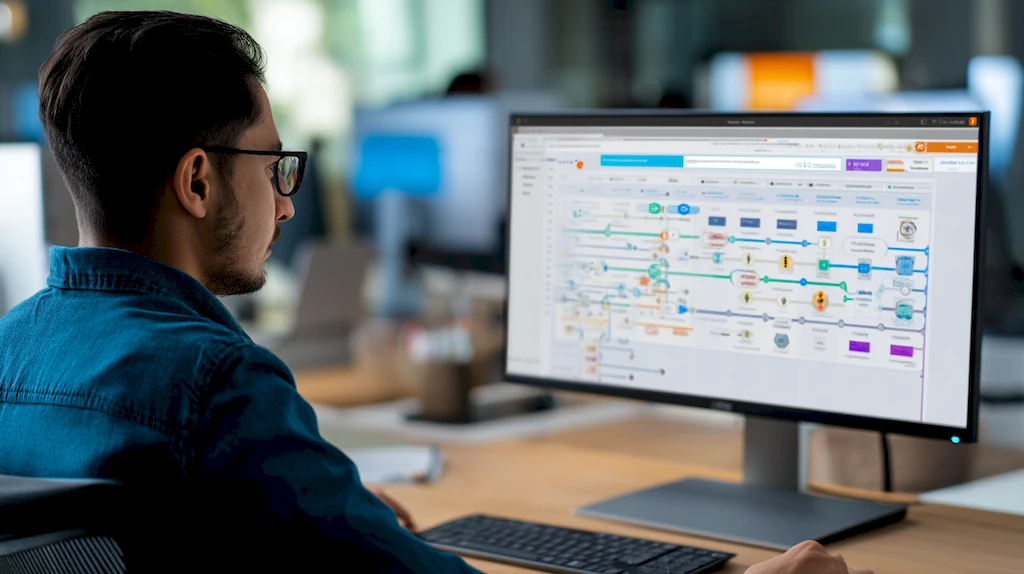

Furthermore, AI-driven monitoring tools are being developed to predict potential failures before they happen. By utilizing machine learning algorithms, these tools can analyze historical performance data, monitor system health in real-time, and provide actionable insights, enabling DBAs to anticipate issues and address them proactively before they impact operations.
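
Such tools rely on machine learning models, but the basic idea of flagging behavior that departs from recent history can be shown with a much simpler statistical stand-in. The sketch below is a rolling z-score detector, not machine learning: it flags a metric sample (for example, query latency) that lies more than `threshold` standard deviations from the mean of the last `window` samples. The class name and parameters are illustrative.

```python
import statistics
from collections import deque


class RollingZScoreDetector:
    """Flag samples far from the recent mean (a simple statistical baseline, not ML)."""

    def __init__(self, window: int = 60, threshold: float = 3.0, min_samples: int = 10):
        self.history = deque(maxlen=window)  # keeps only the most recent `window` samples
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if `value` is anomalous versus recent history, then record it."""
        anomalous = False
        if len(self.history) >= self.min_samples:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.threshold:
                anomalous = True
        self.history.append(value)
        return anomalous


detector = RollingZScoreDetector(window=30, threshold=3.0, min_samples=5)
latencies_ms = [10, 11, 10, 12, 11, 10, 11, 50]  # the last sample is a sudden spike
flags = [detector.observe(v) for v in latencies_ms]
print(flags)  # [False, False, False, False, False, False, False, True]
```

Anomalous samples are still added to the history, so a sustained shift gradually becomes the new baseline; whether that is desirable depends on the metric being watched.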

About The Author

Max Carrington is a seasoned NoSQL Database Administrator with over 9 years of experience in the field, specializing in optimizing databases for performance and reliability. His expertise lies in managing complex data systems and implementing innovative solutions that drive efficiency. Outside of his technical role, Max contributes to the online community through his work with The Real Mackoy, a collaborative platform that unites a diverse group of bloggers. Learn more about Max and his contributions at The Real Mackoy, where he helps showcase the talents of those who truly embody the essence of the internet’s best.