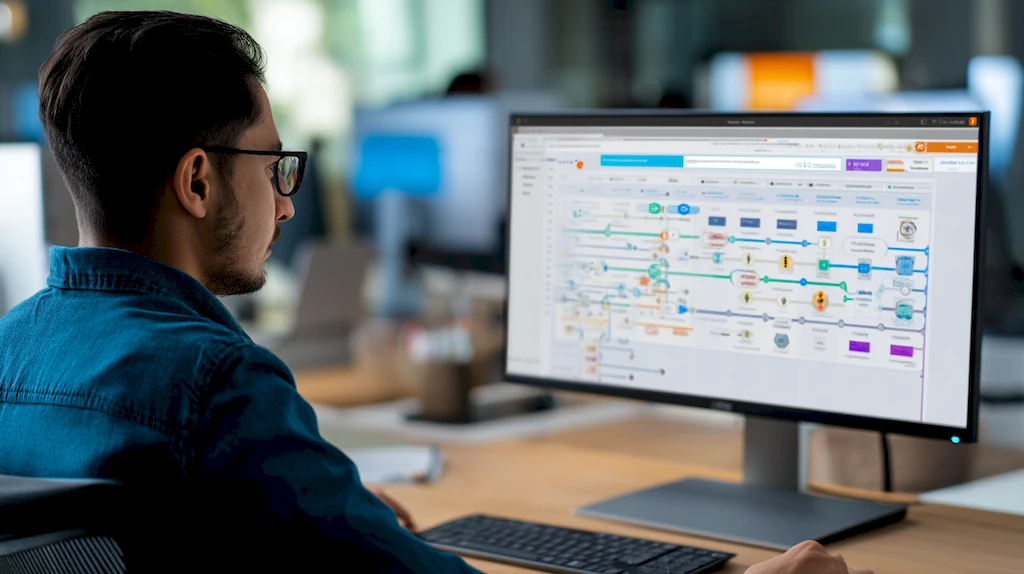

Managing over 100 database servers can be a daunting task for database administrators, but effective monitoring is crucial for ensuring optimal performance and uptime. This article explores the unique challenges of database management, the selection of appropriate monitoring tools, and the essential metrics to track for proactive oversight. By implementing a centralized dashboard, establishing regular routines, and leveraging automation, DBAs can streamline their monitoring processes. Furthermore, best practices for scaling and continuous improvement will be highlighted, empowering DBAs to maintain control and peace of mind while managing complex database environments.

When to Normalize, When to Denormalize: A Practical Approach

In database management, normalization and denormalization are fundamental concepts that every administrator and developer must master to balance data integrity, performance, and scalability. Normalization is the process of organizing data to reduce redundancy and improve consistency, typically by following a set of formal rules known as normal forms (such as First Normal Form, Second Normal Form, and Third Normal Form). Denormalization, in contrast, involves selectively introducing redundancy to optimize database performance, particularly for read-heavy or reporting-focused systems. Understanding when and how to apply each approach is critical to designing systems that are both reliable and efficient over the long term.
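As a minimal sketch of what a normal-form rule looks like in practice, the Python snippet below uses the standard-library `sqlite3` module to bring a hypothetical contacts table into First Normal Form. A comma-separated `phones` column (a non-atomic value) is split into a separate `contact_phones` table with one row per number. The table and column names are illustrative assumptions, not taken from any particular system.

```python
import sqlite3

# Hypothetical source rows: the phones field holds a comma-separated list,
# which violates First Normal Form (column values are not atomic).
flat_rows = [
    (1, "Alice", "555-0101,555-0102"),
    (2, "Bob", "555-0201"),
]

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE contacts (
        contact_id INTEGER PRIMARY KEY,
        name       TEXT NOT NULL
    );
    -- One row per phone number: every column now holds a single atomic value.
    CREATE TABLE contact_phones (
        contact_id INTEGER NOT NULL REFERENCES contacts(contact_id),
        phone      TEXT NOT NULL,
        PRIMARY KEY (contact_id, phone)
    );
""")

# Split each multi-valued field into separate rows while loading.
for contact_id, name, phones in flat_rows:
    conn.execute("INSERT INTO contacts VALUES (?, ?)", (contact_id, name))
    for phone in phones.split(","):
        conn.execute("INSERT INTO contact_phones VALUES (?, ?)",
                     (contact_id, phone.strip()))

def owner_of(phone):
    # An exact-match lookup now works without parsing strings or using LIKE '%...%'.
    row = conn.execute(
        """SELECT c.name FROM contacts c
           JOIN contact_phones p ON p.contact_id = c.contact_id
           WHERE p.phone = ?""",
        (phone,),
    ).fetchone()
    return row[0] if row else None

print(owner_of("555-0102"))  # Alice
```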

Normalization plays a vital role in ensuring data accuracy and integrity, especially in transactional systems where inserts, updates, and deletes occur frequently. By structuring data so that each fact is stored only once and every non-key attribute depends on the key, the whole key, and nothing but the key (the informal statement of Third Normal Form), databases become easier to maintain and less prone to update, insertion, and deletion anomalies. In banking systems, healthcare databases, and other environments where transactional correctness is paramount, normalization complements the ACID guarantees of the database engine and is essential for maintaining trust in the data over time.
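An update anomaly can be reproduced in a few lines. This sketch, again using Python's built-in `sqlite3` with hypothetical `orders_flat`, `customers`, and `orders` tables, changes a customer's email in a denormalized table and in a normalized one. The flat table ends up holding two different emails for the same customer, while the normalized schema needs only a single-row update.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical denormalized table: the customer's email repeats on every order.
    CREATE TABLE orders_flat (
        order_id       INTEGER PRIMARY KEY,
        customer_id    INTEGER NOT NULL,
        customer_email TEXT NOT NULL,
        amount         REAL NOT NULL
    );
    INSERT INTO orders_flat VALUES
        (1, 10, 'ann@example.com', 25.0),
        (2, 10, 'ann@example.com', 40.0);

    -- Hypothetical normalized schema: the email is stored once, in customers.
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        email       TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (10, 'ann@example.com');
    INSERT INTO orders VALUES (1, 10, 25.0), (2, 10, 40.0);
""")

# Update anomaly: an update that touches only one redundant copy
# leaves the flat table contradicting itself.
conn.execute("UPDATE orders_flat SET customer_email = 'ann@new.example' WHERE order_id = 1")
flat_emails = {r[0] for r in conn.execute(
    "SELECT customer_email FROM orders_flat WHERE customer_id = 10")}

# Normalized: one single-row update, and every order sees it through the join.
conn.execute("UPDATE customers SET email = 'ann@new.example' WHERE customer_id = 10")
norm_emails = {r[0] for r in conn.execute(
    """SELECT c.email FROM orders o
       JOIN customers c ON c.customer_id = o.customer_id
       WHERE o.customer_id = 10""")}

print(len(flat_emails), len(norm_emails))  # 2 1
```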

However, not all workloads benefit equally from strict normalization. Denormalization can be a strategic choice in scenarios where rapid data retrieval outweighs the risks of redundancy. By consolidating related data into fewer tables, or storing precomputed aggregates, denormalized designs reduce the need for expensive joins and aggregation at query time, which can substantially speed up reporting, analytics, and dashboard generation. Data warehouses, business intelligence platforms, and e-commerce websites under heavy user load often employ denormalization techniques to meet performance expectations.
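To make the join-avoidance trade-off concrete, here is a minimal sketch (Python `sqlite3`, hypothetical `customers` and `orders` tables with made-up figures) that computes revenue per region with a join and `GROUP BY`, then stores the same result in a flat `revenue_by_region` table that a dashboard could read with a plain `SELECT`. With a handful of rows the speed difference is negligible; the benefit only shows up at scale.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical normalized source tables.
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT NOT NULL);
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
    INSERT INTO orders VALUES (1, 1, 100.0), (2, 2, 50.0), (3, 3, 25.0), (4, 1, 75.0);
""")

# Normalized read path: every dashboard request joins and aggregates.
JOIN_QUERY = """
    SELECT c.region AS region, SUM(o.amount) AS revenue
    FROM orders o JOIN customers c ON c.customer_id = o.customer_id
    GROUP BY c.region ORDER BY c.region
"""

# Denormalized read path: materialize the result once into a flat reporting
# table, so reads need no join or GROUP BY.
conn.execute(f"CREATE TABLE revenue_by_region AS {JOIN_QUERY}")
FLAT_QUERY = "SELECT region, revenue FROM revenue_by_region ORDER BY region"

print(conn.execute(JOIN_QUERY).fetchall())  # [('North', 200.0), ('South', 50.0)]
print(conn.execute(FLAT_QUERY).fetchall())  # [('North', 200.0), ('South', 50.0)]
```

Note that `revenue_by_region` is a snapshot: it does not change when new orders arrive, so a real deployment needs a refresh or synchronization strategy. That ongoing cost is the price of the faster reads.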

Choosing between normalization and denormalization depends on a range of factors, including application requirements, database performance characteristics, and anticipated scalability needs. Transactional systems, where data changes frequently and accuracy is paramount, generally favor normalized designs. Systems with predominantly read-heavy workloads, where query speed is critical, may lean towards denormalization to improve user experience.

Guidelines for Choosing Normalization

- Applications require frequent updates, inserts, and deletes.

- Data integrity, consistency, and compliance are high priorities.

- Complex data relationships need to be maintained cleanly over time.

- The cost of maintaining redundant or inconsistent data would be too high.

- Strict enforcement of ACID properties is a business or regulatory requirement.

Guidelines for Considering Denormalization

- Application performance is limited by frequent, expensive joins across normalized tables.

- Data is read much more frequently than it is written or updated.

- Fast aggregation and reporting are essential to user workflows or business intelligence processes.

- Data consistency risks are acceptable, and redundancy management strategies are in place.

- Latency reduction is prioritized over strict normalization constraints.
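One common redundancy-management strategy is to let the database itself propagate changes to duplicated columns. The sketch below assumes SQLite (via Python's `sqlite3`) and a hypothetical `order_lines` table that copies `products.name` to avoid a join on reads; an `AFTER UPDATE` trigger rewrites every copy whenever a product is renamed. Other engines use different trigger syntax, and scheduled batch refreshes or application-level updates are equally valid alternatives.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE products (product_id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    -- Hypothetical table: order_lines deliberately duplicates products.name.
    CREATE TABLE order_lines (
        line_id      INTEGER PRIMARY KEY,
        product_id   INTEGER NOT NULL REFERENCES products(product_id),
        product_name TEXT NOT NULL,
        qty          INTEGER NOT NULL
    );
    -- Redundancy management: the trigger runs as part of the UPDATE statement,
    -- so the copies change atomically with the source row.
    CREATE TRIGGER sync_product_name
    AFTER UPDATE OF name ON products
    BEGIN
        UPDATE order_lines SET product_name = NEW.name
        WHERE product_id = NEW.product_id;
    END;

    INSERT INTO products VALUES (1, 'Widget');
    INSERT INTO order_lines VALUES (1, 1, 'Widget', 3), (2, 1, 'Widget', 5);
""")

conn.execute("UPDATE products SET name = 'Widget Pro' WHERE product_id = 1")
names = {r[0] for r in conn.execute("SELECT product_name FROM order_lines")}
print(names)  # {'Widget Pro'}
```

The trade-off is visible here: each rename now costs an extra write per duplicated row, which is acceptable only when reads greatly outnumber such updates.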

Case studies highlight how businesses adapt these strategies based on real-world challenges. For example, a retail analytics platform migrated portions of its reporting database to a denormalized schema, dramatically improving dashboard load times during peak holiday traffic. Meanwhile, a financial institution maintained strict normalization in its transactional core systems to uphold data reliability, using a separate, denormalized read replica for reporting purposes—a hybrid approach that effectively combined the strengths of both methodologies.
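The hybrid pattern from the financial-institution example can be sketched at small scale. Assuming SQLite, the snippet below keeps normalized `accounts` and `transfers` tables in the main database and attaches a second in-memory database as a stand-in for the separate reporting copy. A hypothetical `refresh_reporting` function rebuilds a flattened `transfer_report` table in one transaction. This is not how a production read replica is configured (replication is engine-specific); it only illustrates the data flow and the staleness window between refreshes. All names and figures are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Stand-in for a separate reporting store (hypothetical name "reporting").
conn.execute("ATTACH DATABASE ':memory:' AS reporting")
conn.executescript("""
    -- Normalized core: handles transactional writes.
    CREATE TABLE accounts (account_id INTEGER PRIMARY KEY, owner TEXT NOT NULL);
    CREATE TABLE transfers (
        transfer_id INTEGER PRIMARY KEY,
        account_id  INTEGER NOT NULL REFERENCES accounts(account_id),
        amount      REAL NOT NULL
    );
    INSERT INTO accounts VALUES (1, 'Acme Ltd'), (2, 'Beta plc');
    INSERT INTO transfers VALUES (1, 1, 500.0), (2, 2, 125.0);

    -- Denormalized reporting table: owner is copied from accounts on purpose.
    CREATE TABLE reporting.transfer_report (
        transfer_id INTEGER PRIMARY KEY,
        owner       TEXT NOT NULL,
        amount      REAL NOT NULL
    );
""")

def refresh_reporting(conn):
    """Rebuild the flattened report from the normalized core in one transaction."""
    with conn:  # commits on success, rolls back on error
        conn.execute("DELETE FROM reporting.transfer_report")
        conn.execute("""
            INSERT INTO reporting.transfer_report (transfer_id, owner, amount)
            SELECT t.transfer_id, a.owner, t.amount
            FROM transfers t JOIN accounts a ON a.account_id = t.account_id
        """)

refresh_reporting(conn)
conn.execute("INSERT INTO transfers VALUES (3, 1, 75.0)")
conn.commit()
# The report stays stale until the next refresh runs.
stale = conn.execute("SELECT COUNT(*) FROM reporting.transfer_report").fetchone()[0]
refresh_reporting(conn)
fresh = conn.execute("SELECT COUNT(*) FROM reporting.transfer_report").fetchone()[0]
print(stale, fresh)  # 2 3
```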

Ultimately, normalization and denormalization are tools, not doctrines. Each brings distinct strengths and trade-offs, and the best database designs often incorporate a thoughtful balance of the two, tailored to operational demands. By assessing workloads carefully and being willing to adjust strategies as requirements evolve, database professionals can design systems that are not only performant but also maintainable, scalable, and resilient in the face of changing business needs.

About The Author

Ruby Palmer is a SQL Performance Tuning Specialist based in the United Kingdom, boasting over 8 years of experience in optimizing database performance and enhancing operational efficiency. With a deep understanding of SQL queries and performance metrics, Ruby has helped numerous organizations streamline their data management processes. Additionally, she is an integral contributor to the Emerald Sunrise Startup and Investment Site, where she shares practical strategies, investment insights, and real-world advice for founders and startups. Explore her insights at emeraldsunrise.com/, where Emerald Sunrise empowers entrepreneurs to build, grow, and scale their ventures with confidence.